#RED by Cameron Gould on Sun Jan 22 2023

ChatGPT has taken over the world. In less than two months, the AI tool stormed the web and revolutionized how we interact with the internet and, more importantly, with each other. All over Twitter, there are folks discussing how they use ChatGPT to help generate ideas for new businesses, write or debug code snippets, and even write entire articles or essays. But there was something that stood out to me today.

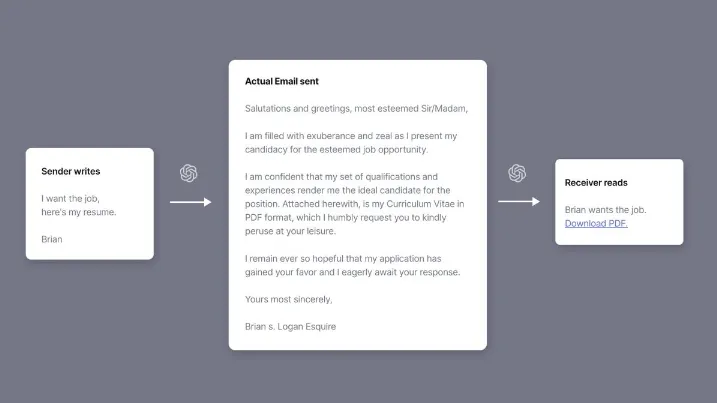

I saw a tweet that described the “future of email” with ChatGPT. It displayed a photo that showed what you would write, what ChatGPT would output, and what the recipient would see.

Clearly, this tweet was a joke about how you’d use ChatGPT to dress up your email so it sounds more sophisticated, while your recipient would only want to see the most important details of your message. Nonetheless, it made me think quite a bit about what might happen if ChatGPT does take over communication channels.

The first thing that came to my mind was, “that’s no longer you communicating.” I imagined a person sitting in a room, practically responding to an email with their eyes shut. No thought is put into the message whatsoever. Yet still, an email is generated that contains sophisticated language. Everything about this message, aside from the sentiment and purpose, has no actual link to the individual who prompted its generation.

On the other end is another person getting an email. They open it up, and it’s this sophisticated message that, to them, makes no sense. Maybe it’s difficult because their literacy level is below what the message requires. So they use ChatGPT to summarize the message in ELI5 language.

This example highlights one key benefit of ChatGPT: greater access to communication for those with lower literacy. ChatGPT acts as a superpower here, enabling folks to write things that they maybe didn’t have the competency to write otherwise. For this purpose, I think ChatGPT is amazing. It enables our society to break down barriers that tend to hold less privileged folks down, and that is always a win.

But then there are the other use cases I can imagine where this absolutely will become a problem. I know it because I have already seen it happen without ChatGPT, and I fear that something like ChatGPT will only make it worse. Go online to any social media site, and you’ll see varying degrees of authenticity displayed. Some people post their entire world on social media and are their true selves. But for the vast majority of folks, there is a veil of misdirection that coats their online presence. They start to lack authenticity.

Personally, I’ve seen this at its worst on Instagram. It’s quite disturbing how far folks will go on the platform to make their lives look far more interesting than they are. I’ve heard stories of people saving vacation photos just to post them throughout the year to make it seem like they travel all the time. I’ve witnessed people pose in front of expensive cars they don’t own or show off a thousand dollars they took out of the bank that they’d later use to pay rent. This behavior is common all over Instagram, and it’s widely speculated that consuming all of this content can cause depression since, in contrast, your life just doesn’t seem as interesting as the person posting pictures of themselves in Hawaii every three weeks.

The reason people do these things is a whole discussion on its own, but the point is that authenticity on the internet is already shaky. With ChatGPT, it could completely crumble. Take a moment to imagine what social media would be like 15 years from now. ChatGPT (and even better models) have been around for a really long time, and an entire generation of children has been raised with this tool in hand. Companies and individuals alike are using it to generate most of the content they put online. Captions, emails, essays, articles, portfolios, everything will be generated.

So what happens when you text someone? Are they going to text you back with their words, or ChatGPT’s words? Are you going to send your own words? And more importantly… if all of this text is generated, is any person really having the conversation? Or are we merely the folks that trigger ChatGPT to have a conversation with itself?

Okay, enough with the existential dystopian exposition. In reality, ChatGPT is a really cool and useful tool that can assist you in many ways, but remember that authenticity matters. Authenticity is the willingness to stay true to yourself, and part of who you are is how you communicate. It’s brave and admirable to be authentic.

In this new world of generative AI, remember that ChatGPT is a tool. Just like any other tool, there’s a time and place for when it ought to be used. When all you have is a hammer, everything looks like a nail. ChatGPT should not be used to try to solve all of your problems. Before you run to it for help on your next essay, email, article, or whatever else, consider that an authentic piece might work better.

This article was written entirely by me, and not ChatGPT :)